The details we notice, and those we ignore

This week, we take a deeper look at perception and reality. The details that we pay attention to are shaped by our experience, and in turn shape our worldview, which we then use to program computers to see or not see particular aspects of what they perceive. It’s a strange ouroboros of perception and bias that we’re continually trying to untangle.

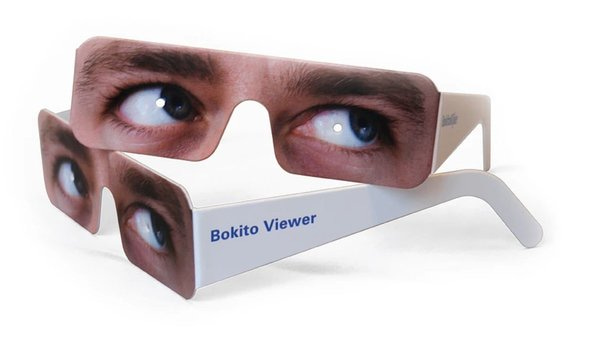

Plus, some weird paper glasses to keep you from accidentally provoking the gorillas at the zoo.

—Matt & Alexis

1: Everything is fiddly

This John Salvatier blog post is not specifically about ethics, or technology, or design, but it describes a way of thinking that shapes how we might approach all three. He illustrates how seemingly simple things contain an unexpected number of details and problems to solve once you actually engage with them. “If you’re a programmer, you might think that the fiddliness of programming is a special feature of programming, but really it’s that everything is fiddly, but you only notice the fiddliness when you’re new.” This is really the essence of expertise and craftsmanship: understanding those details, and having repeated a process often enough to have developed a point of view on them.

When we do talk about ethics and design and technology, it’s often in broad strokes, which is kind of boring because we tend to agree at a high level about ethical goals. One of the reasons we started Ethical Futures Lab is that we wanted to talk about the details and the execution. That’s where it actually gets interesting, and it’s where practitioners have to make complicated and nuanced choices.

Salvatier also points out that our perspective on the details embedded in our reality can lead to what he calls getting “intellectually stuck”: “Stuck in your current way of seeing and thinking about things. Frames are made out of the details that seem important to you. The important details you haven’t noticed are invisible to you, and the details you have noticed seem completely obvious and you see right through them.”

Reality has a surprising amount of detail

My dad emigrated from Colombia to North America when he was 18 looking for a better life…

2: "Normalizing" reality

Last week, millions of Northern California residents looked outside at an otherworldly orange sky. Millions of acres across the state, and into Oregon and Washington, were ablaze, and smoke blanketed a significant portion of the country. San Franciscans began posting photos of the phenomenon but soon realized that their phones were trying to color-correct the images, rendering the orange sky duller and grayer. The cameras had a built-in concept of what “normal” typically looks like, and when the world deviated from that norm, their software adjusted the image to better fit that model.
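Phone camera pipelines are proprietary and far more sophisticated than this, so what follows is not how any particular phone works. It is a minimal Python sketch of one classic textbook heuristic, “gray-world” white balance, which assumes the average color of any scene is neutral gray and rescales each color channel until it is. The function name `gray_world_balance` and the sample pixel values are hypothetical, chosen only for illustration.

```python
def gray_world_balance(pixels):
    """Hypothetical sketch of gray-world white balance.

    Assumes the scene's average color "should" be gray, so each RGB
    channel is scaled until all three channel means are equal.
    """
    n = len(pixels)
    # Average value of the red, green, and blue channels across the image.
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    # The "normal" target: a neutral gray at the overall mean brightness.
    gray = sum(means) / 3
    # Per-channel gain that pulls each channel mean toward that gray.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    # Apply the gains and clamp to the 0-255 range of 8-bit color.
    return [tuple(min(255, round(p[c] * gains[c])) for c in range(3)) for p in pixels]


# A tiny, uniformly orange "sky" (hypothetical RGB values).
orange_sky = [(230, 120, 40)] * 4
balanced = gray_world_balance(orange_sky)
print(balanced[0])  # prints (130, 130, 130)
```

Fed a uniformly orange sky, the heuristic has no way of knowing the orange is real, so it “corrects” every pixel to a flat gray: the washed-out wildfire photos in miniature.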

Also last week, a Canadian PhD candidate was helping a colleague get his Zoom setup just right for their upcoming remote classes, and couldn’t figure out why his Black colleague’s head kept disappearing whenever the colleague turned on the Virtual Background feature. Only when the colleague stood in front of a shiny globe, which appeared just above and behind his head, was his head correctly interpreted as foreground rather than background. When they posted their test images to Twitter, Twitter’s image-cropping algorithm kept favoring the white colleague’s half of the photo, even when their positions were reversed. While we can’t know exactly what data these algorithms were trained on, it’s clear their results quite literally erase people of color.

We present these two cases not to draw an equivalence, but to show how the systems that surround us are built on deeply ingrained, and often very incorrect, concepts of what “normal” looks like. These issues are not new: beginning in the 1950s, Kodak produced so-called “Shirley” cards (named for the young white model they featured) to calibrate color in film processing, with the word “normal” prominently printed on the card.

Biased tests, skewed training data, and naïve machine learning routines persist, and we must continue to identify how they affect how we see the world around us. We can begin by rejecting the concept of a universal norm; what is optimal for one situation will be far less so in another, so we must allow our systems to be adjusted when assumptions fail.

Why Can't My Camera Capture the Wildfire Sky?

Why the orange sky looks gray

3: Unintended consequences in Animal Crossing

While we’ve talked quite a bit about disinformation, abuse, and other problematic conduct in the context of social platforms, this piece from Slate argues that video games should prepare for similar issues. As gaming platforms have become more flexible and fluid spaces for all kinds of interactions, they may be ripe environments for political discourse. The article cites recent events, like the Biden-Harris lawn signs available in Animal Crossing and the racial justice panel recently hosted in Fortnite (where audience members used an in-game mechanic to throw tomatoes at CNN commentator Van Jones). As political activity makes its way onto these platforms, it would behoove them to proactively develop policies and constraints that help prevent the kinds of misinformation, disinformation, and abuse we’ve seen in other contexts.

Disinformation will come for Animal Crossing

Learn from social media’s mistakes, or else you’re doomed to repeat them.

4: The end of privacy: a thought exercise

What would happen if everyone’s personal data were to be leaked publicly, all at once? Web searches, credit card numbers, chat logs—all secrets suddenly made not-so-secret at a global scale? Gizmodo asked a handful of experts to weigh in on this potential future scenario, sharing their analysis of what a post-privacy society might look like. Some respondents theorized about how society’s norms and morals might change, while others dove into the logistics of how we might re-establish and validate personal data on a tactical level.

What Would Happen If All Personal Data Leaked at Once?

Subconsciously, on some level, we’re all waiting for it: the leak that wrecks society and confirms what we all know already…

5: AI written op-eds

In perhaps the most direct demonstration of machine learning’s capacity to create readable text, The Guardian asked GPT-3, a language generator, to write an op-ed that aimed to convince people that robots were peaceful and not looking to eradicate humanity. Sure.

Leaving aside the fact that the “prompt” was the entire first paragraph (and fairly wooden prose to begin with), the result is a compelling piece of reading. It doesn’t advance much of an argument beyond “I’m not programmed to think about killing people, so I don’t” and there are still definite traces of a system that doesn’t fully understand syntax and structure, but it’s certainly a far more convincing output than others we’ve seen.

We believe in the value that machine learning systems can bring to many job functions, particularly ones where massive amounts of data must be understood quickly. That said, we believe AI is better seen as a partner to a more intuitive human way of thinking, not as a replacement. Stunts like these set up an antagonistic relationship between people and machines rather than examining the more interesting potential for collaboration.

A robot wrote this entire article. Are you scared yet, human?

We asked GPT-3, OpenAI’s powerful new language generator, to write an essay for us from scratch. The assignment? To convince us robots come in peace

6: New Zealand's charter for algorithms

The issue of algorithmic transparency — i.e. “what is that computer thinking” — comes up in nearly every issue of Six Signals; it’s come up twice already in this issue alone. Understanding what decisions are being made and why is a key component to developing more trust in algorithms and their outputs.

To foster this trust and to be open about a system’s biases, the government of New Zealand recently developed the world’s first set of guidelines on how algorithms are used by the country’s public agencies. Systems must allow for peer review to avoid “unintended consequences,” must embed a Te Ao Māori perspective (the worldview of Indigenous Māori New Zealanders) in their development and use, and must provide a “plain English” explanation of the factors that went into any decision the system makes. Given that these systems may affect everything from welfare and unemployment benefits to when and how to launch military strikes, trust built through transparency should, in theory, help New Zealanders have confidence that their government is accountable. However, it will be interesting to see how this charter is implemented, from figuring out what exactly counts as an algorithm all the way to what transparency actually looks like in practice.

New Zealand Has a Radical Idea for Fighting Algorithmic Bias: Transparency

From car insurance quotes to which posts you see on social media, our online lives are guided by invisible, inscrutable algorithms.

One "avert your eyes" thing

We’ve previously covered attempts at so-called “gaze correction” in video chat applications, where computer scientists are trying to get people to make more perceived eye contact with one another. This is the opposite of that, and way more hilarious. These low-tech glasses were distributed to visitors at the Rotterdam Zoo so that they would appear to avert their gaze in front of the gorillas, who are liable to perceive direct eye contact as aggressive behavior.

love these ridiculous paper glasses designed to avert the zoo visitor's gaze, to avoid inadvertent aggression towards gorillas https://t.co/4luHUQnyeq https://t.co/84DmRRkZjQ

Six Signals: Emerging futures, 6 links at a time.

Powered by Revue